2024 Robotics Student Fellows

Research Overview

When a ground vehicle navigates a busy city, how does it predict whether a person rushing by might cross its path? One approach is to represent objects and their relationships explicitly. This involves modelling how objects look (photometry), their shapes (geometry), and their behaviour (semantics). A cutting-edge model called semantic 3D Gaussian Splatting does this by placing tiny Gaussians, each representing part of an object, at specific 3D coordinates, creating detailed, photo-realistic scenes. But associating complex labels with millions of these tiny Gaussians can be memory-intensive, which makes the approach hard to use on robots that constantly encounter new environments.

I worked on a solution to simplify this by assigning one semantic label per object, rather than one per tiny Gaussian. The challenge was ensuring that, even though a robot only sees parts of an object at a time, it can correctly merge pieces of the same object while avoiding mistakes over time.
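To get a feel for why one label per object helps, here is a rough back-of-the-envelope sketch. The scene size, number of objects, and label dimension are hypothetical numbers chosen for illustration, and the function names are not taken from the project.

```python
def per_gaussian_label_bytes(num_gaussians, label_dim, bytes_per_value=4):
    """Memory if every Gaussian stores its own semantic label vector."""
    return num_gaussians * label_dim * bytes_per_value


def per_object_label_bytes(num_gaussians, num_objects, label_dim,
                           bytes_per_value=4, bytes_per_index=4):
    """Memory if each Gaussian stores only an object index
    and each object stores a single label vector."""
    index_bytes = num_gaussians * bytes_per_index
    label_bytes = num_objects * label_dim * bytes_per_value
    return index_bytes + label_bytes


# Hypothetical scene: 1 million Gaussians, 200 objects, 512-dimensional labels.
dense_bytes = per_gaussian_label_bytes(1_000_000, 512)
shared_bytes = per_object_label_bytes(1_000_000, 200, 512)
print(dense_bytes, shared_bytes)
```

Under these assumed numbers, the per-Gaussian layout needs about 2 GB for labels alone, while the per-object layout needs a few megabytes.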

Besides this, I also explored how robots could navigate using only semantic data (those complex labels), which was a lot of fun, but that's a story for another time!

Research Overview

Humanoid robotic hands face challenges in manipulating unknown objects, especially in adapting to varying shapes and levels of stiffness. Accurate proprioception and tactile sensing are critical for effective manipulation. My work at the Soft Robotics Lab focuses on improving the proprioception of a tendon-driven robotic hand by extending the integration of Inertial Measurement Units (IMUs) and tactile sensors to the entire hand. IMUs were mounted on flexible printed circuit boards along the phalanges to measure joint angles, while tactile sensors were positioned on the inner side of the fingers to detect forces in three directions. Our experiments showed a discrepancy between the motor-estimated joint angles and the IMU measurements when the robotic hand grasps an object. Preliminary results suggest that this discrepancy could serve as a predictive indicator of object grasping. Future work includes using this sensor integration to enhance control feedback, enabling the robotic hand to adjust grips, detect collisions, and improve manipulation.
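As a minimal sketch of how such a discrepancy could be turned into a grasp indicator, the snippet below flags time steps where the motor-estimated and IMU-measured joint angles disagree by more than a threshold. The function name, the 5-degree threshold, and the sample angles are all hypothetical and are not results from the experiments.

```python
def grasp_indicator(motor_angles_deg, imu_angles_deg, threshold_deg=5.0):
    """Return one boolean per time step: True when any joint's motor estimate
    and IMU measurement differ by more than threshold_deg (assumed value)."""
    flags = []
    for motor_row, imu_row in zip(motor_angles_deg, imu_angles_deg):
        largest_gap = max(abs(m - i) for m, i in zip(motor_row, imu_row))
        flags.append(largest_gap > threshold_deg)
    return flags


# Made-up readings for two joints over three time steps.
motor = [[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]]
imu = [[10.5, 19.0], [29.0, 33.0], [38.0, 59.0]]
flags = grasp_indicator(motor, imu)
print(flags)
```

In practice the threshold would need to be calibrated per joint, since some discrepancy may be present even without contact.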

Research Overview

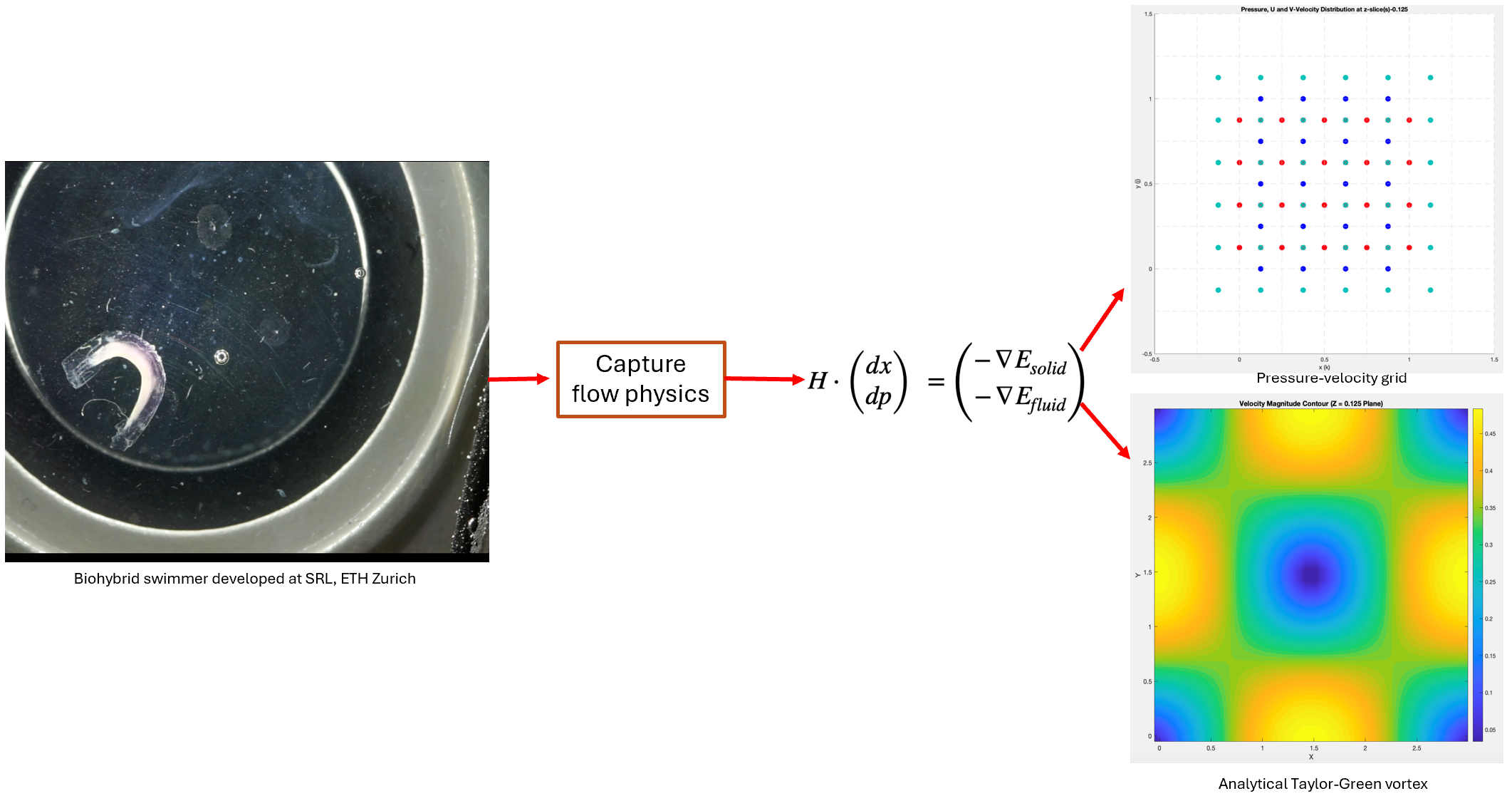

Biohybrid robots are an emerging class of soft robots made of artificial living tissues. They can be deployed in a wide range of applications, such as motile swimmers or muscle rehabilitation. To better understand how biohybrids function, it is useful to develop a biophysically faithful numerical framework that can simulate the robot's motion and its interactions with its surroundings. An important component of the surroundings is the fluid in which the robots move, whose effects on the robot are complex yet necessary to understand. However, numerically resolving the entire flow field for fluid-structure interaction scenarios can be challenging and time-consuming. We propose a variational approach to incorporating fluid-solid coupling into the SRL’s in-house simulation framework (SRL-SIM) by formulating the coupling as a boundary-interaction energy and adding the fluid to the existing energy-minimization framework. Specifically, we propose expanding the existing Newton solve to consider the kinetic energy of the fluid and an energy at the boundary that enforces the no-penetration boundary conditions. This introduces fluid pressure as a second variable into the system, so we now minimize with respect to both solid position and fluid pressure. Adding the fluid and coupling energies to the original matrix system incorporates the surrounding fluid's influence on the swimmer's locomotion while keeping the solve to a single matrix equation, thus potentially avoiding a significant increase in computation time. This approach increases the modularity and functionality of the SRL-SIM framework.
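One schematic way to write the coupled problem described above is given below. The notation is illustrative and not taken from SRL-SIM: $x$ stands for the solid positions, $p$ for the fluid pressure, $E_{\text{solid}}$ for the existing solid energy, $E_{\text{fluid}}$ for the fluid kinetic energy, and $E_{\text{bdry}}$ for the boundary energy. How the fluid and boundary terms depend on $x$ and $p$ is left generic here.

$$
\min_{x,\,p}\; E(x,p) = E_{\text{solid}}(x) + E_{\text{fluid}}(x,p) + E_{\text{bdry}}(x,p)
$$

A Newton step on this two-variable energy is then a single block linear system:

$$
\begin{bmatrix}
\nabla^2_{xx} E & \nabla^2_{xp} E \\
\nabla^2_{px} E & \nabla^2_{pp} E
\end{bmatrix}
\begin{bmatrix} \Delta x \\ \Delta p \end{bmatrix}
= -
\begin{bmatrix} \nabla_x E \\ \nabla_p E \end{bmatrix}
$$

The off-diagonal blocks carry the fluid-solid coupling, which is how the fluid can be added without a separate flow solve.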

Research Overview

Despite significant research in ground robot navigation algorithms, many systems still struggle in highly cluttered and constrained environments. Historically, evaluating and comparing these algorithms has also been difficult due to the lack of widely adopted standardized testing methods. The Benchmark Autonomous Robot Navigation (BARN) Challenge addresses this gap by providing a unified platform for benchmarking and enhancing state-of-the-art navigation systems in demanding settings. It offers a standardized robot setup, performance metrics for navigation assessment, and a comprehensive dataset of environments ranging from open spaces to densely cluttered areas.

During my fellowship, I focused on benchmarking some of the latest navigation algorithms developed in the Autonomous Systems Lab using the BARN framework. Specifically, I evaluated a reactive LiDAR navigation algorithm based on Riemannian Motion Policies developed by Michael Pantic et al. Preliminary results suggest that integrating this algorithm with the Vector Field Histogram Plus (VFH+) algorithm yields notable improvements in performance in cluttered environments. Additionally, I contributed to the ESA SUPPORTER project by developing the robot's body odometry estimation module, enhancing its motion-tracking capabilities.

Research Overview

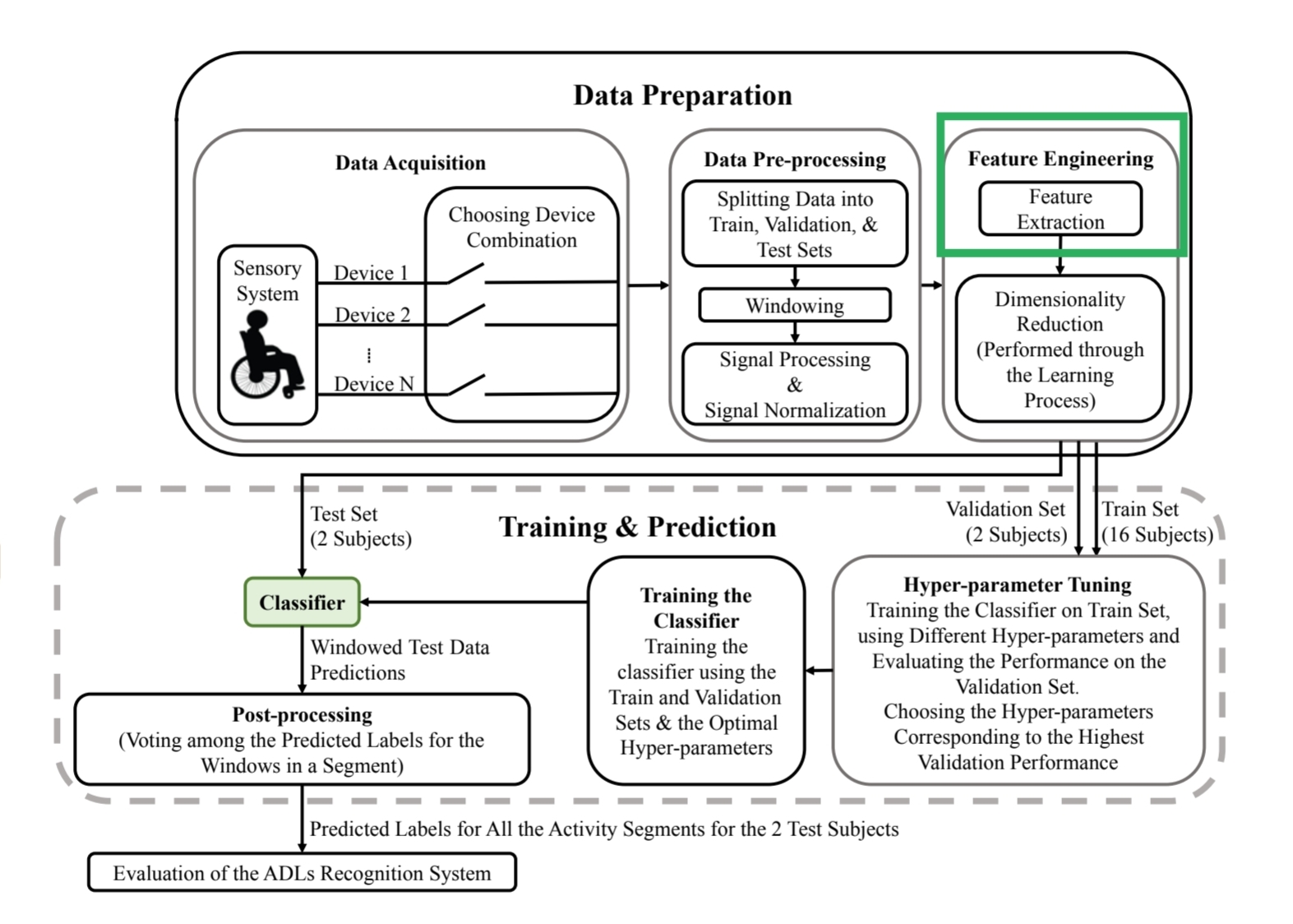

Spinal cord injury patients experience systemic changes that pose a challenge to sensory and motor functions. As a result, patients are prone to secondary health conditions (SHCs) and therefore require constant monitoring to prevent the onset of comorbidities. Activities of Daily Living (ADLs), daily activities like resting, eating and toileting, are good indicators of comorbidities. Some ADLs indicate the onset of comorbidities, and others are affected by comorbidities. Direct surveillance of SHCs is often impractical; hence, monitoring ADLs allows indirect surveillance of SHCs.

Monitoring the ADLs of wheelchair users can be done with a set of unobtrusive sensors. Sensor data collected over time form a time series, which is then used to train classifier models for activity classification. Because time series data are high-dimensional, features are first extracted from them, and the extracted features are used to train the models.

This study seeks to create a toolbox that extracts features from time series data that are pertinent to ADL classification. Features are extracted across the statistical, spectral, and time-frequency domains. Although this toolbox focuses on ADL classification, we aim to make the feature set comprehensive enough to be used in other fields. Currently, the toolbox extracts ~700 features.
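To illustrate the kind of computation involved, here is a minimal sketch that extracts a handful of statistical and spectral features from one sensor window using NumPy. The function name, the feature names, and the synthetic 2 Hz test signal are hypothetical and are not part of the toolbox; time-frequency features (for example, wavelet-based ones) are omitted for brevity.

```python
import numpy as np


def extract_features(signal, sampling_rate_hz):
    """Compute a few illustrative features for a single 1-D sensor window."""
    x = np.asarray(signal, dtype=float)

    # Spectral content of the mean-removed signal.
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / sampling_rate_hz)
    total_power = power.sum()

    return {
        # Statistical domain
        "mean": float(x.mean()),
        "std": float(x.std()),
        "rms": float(np.sqrt(np.mean(x ** 2))),
        # Spectral domain
        "dominant_freq_hz": float(freqs[np.argmax(power)]),
        "spectral_centroid_hz": (
            float((freqs * power).sum() / total_power) if total_power > 0 else 0.0
        ),
    }


# Synthetic window: 200 samples at 50 Hz, a 2 Hz oscillation around an offset of 1.
fs = 50.0
t = np.arange(200) / fs
window = 1.0 + np.sin(2 * np.pi * 2.0 * t)
features = extract_features(window, fs)
print(features)
```

Each window of raw samples is reduced to a short, fixed-length feature vector, which is what the classifier is trained on.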

"I want to express my sincere appreciation to the RSF program for this incredible opportunity to delve into the world of cutting-edge research. It is such an honor for me to study and do research with distinguished scholars in the Intelligent Control System Lab of ETH. My sincere appreciation also goes to my mentor and supervisor for their kindness, guidance and support."

Research Overview

Multi-agent systems have garnered significant attention in recent years due to their wide-ranging applications across areas including robotics, transportation, and telecommunications. For example, teams of robots can collaborate to perform complex tasks such as search and rescue missions, warehouse automation, and agricultural management. However, controlling large-scale multi-agent systems remains challenging, mainly because of the high computational cost and the interactions between agents.

Distributed predictive control provides a promising solution to this problem. In this framework, agents exchange information with each other, such as reference trajectories. Furthermore, all agents agree to stay close to their reference trajectories, keeping the deviation within a bounded set called the “contract”. Because the exchanged information can still differ from the actual information, robust tube MPC is used to guarantee performance, which requires designing a second set, the “tube”.
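As a minimal illustration of what a contract means in practice, the sketch below checks whether an agent's actual trajectory stays within a bounded set around its communicated reference. The box-shaped set, the function name, and the numbers are assumptions made for illustration; the text does not specify the shape of the sets.

```python
def within_contract(actual, reference, half_widths):
    """Return True if every state of the actual trajectory deviates from the
    reference by at most half_widths in each coordinate (assumed box contract)."""
    for actual_state, reference_state in zip(actual, reference):
        for a, r, w in zip(actual_state, reference_state, half_widths):
            if abs(a - r) > w:
                return False
    return True


# Made-up 2-D trajectories over three time steps.
reference = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
actual = [[0.1, -0.05], [1.2, 0.1], [1.9, 0.0]]

loose_ok = within_contract(actual, reference, half_widths=[0.25, 0.25])
tight_ok = within_contract(actual, reference, half_widths=[0.15, 0.15])
print(loose_ok, tight_ok)
```

A larger contract is easier for an agent to respect but tells its neighbours less about where it will be, which is the trade-off that any method for designing these sets has to balance.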

Calculating these sets is a crucial step in distributed predictive control; however, existing methods are complicated and hard to implement. We therefore proposed an optimization-based method to simplify this procedure. By appropriately designing the optimization objective and constraints, agents gain more freedom to plan trajectories with less deviation. The results demonstrate that this method can design the sets automatically while guaranteeing good closed-loop performance.

Research Overview

My team and I are working on two parallel projects for agile but stable legged robots using reinforcement learning. On the one hand, we have been focusing on building agile robots by integrating vision information into the robot's control policy. Specifically, we obtained terrain information using motion capture devices so that the robot can dynamically overcome obstacles. On the other hand, we have been working on a stable control learning process that combines an optimal-control approach with a learning-based approach, which we refer to as a hybrid approach. In detail, we solved optimal-control problems to generate physically feasible motion data and subsequently used it to guide the learning of the control policy.

In conclusion, we established two essential pillars for both the agility and stability of the robots, and we look forward to extending this work to achieve even more agile and stable robots.

"My time at ETH in the Robotic Systems Lab shaped my perspective on what I want to pursue in the future. I am very grateful to have had the opportunity to experience what it is like to do research among such motivated and talented people, and for all the mentorship I received. It was a great and impactful summer, and I’d highly recommend it!"

Research Overview

For legged robots, navigating rough terrain poses inherent difficulties, complicating their ability to move around efficiently and safely. Classical robot navigation systems typically rely on exteroception and struggle with false positive perception failures, especially in environments characterized by ambiguous geometry, such as tall grass, dense foliage, or fences. In real-world missions, it is critical for the robot to carefully explore these environments to ensure that it navigates successfully while fulfilling its mission objectives. This work models false positive failures as curtains in a simulated room with solid walls, leveraging a reinforcement learning-based local navigation policy. The policy integrates failure-prone exteroception with proprioception, allowing the robot to correct its environmental belief and use exploration to investigate its surroundings. The approach is validated through simulation, demonstrating improved navigation performance in the presence of false positive perception failures, with the system performing comparably to traditional planners when no failures are present.