Past Research

2023 Funded Projects

The ALLIES project (Assistant Legged mobiLe manIpulators Enabled with Language modelS) aimed to integrate Large Language Models (LLMs) with legged robotic platforms to enhance autonomous navigation and manipulation capabilities in complex environments. By leveraging LLMs, the project sought to enable robots to perform high-level reasoning, semantic understanding, and efficient path planning, thereby improving their adaptability and performance in real-world tasks.

Principal Investigator

Prof. Marco Hutter

Duration

01.07.2023 - 01.01.2025

Most important achieved milestones

1. Development of Tag Map

Designed an explicit text-based mapping system capable of representing thousands of semantic classes. This innovation facilitates seamless integration with LLMs for spatial reasoning and navigation, enabling robots to interpret and interact with their environments more effectively.

2. Implementation of IPPON Framework

Introduced a novel informative path planning approach guided by common sense reasoning derived from LLMs, enhancing object goal navigation in unexplored environments.

3. Real-World Validation

Successfully deployed and tested the developed systems on legged robots, demonstrating improved autonomy and decision-making in dynamic settings.
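To make the idea of a text-based map in milestone 1 more concrete, the following is a minimal sketch and not the published Tag Map data structure. It assumes that each semantic tag is stored together with the positions where it was observed and that the map is serialized to plain text for an LLM prompt. The class name `TextTagMap`, its methods, and the coordinate format are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]


class TextTagMap:
    """Hypothetical text-based map: semantic tag -> observed positions."""

    def __init__(self) -> None:
        self._locations: Dict[str, List[Position]] = defaultdict(list)

    def add(self, tag: str, position: Position) -> None:
        # Tags are normalized to lower case so "Sofa" and "sofa" share an entry.
        self._locations[tag.lower()].append(position)

    def query(self, tag: str) -> List[Position]:
        # Return a copy so callers cannot mutate the map's internal state.
        return list(self._locations.get(tag.lower(), []))

    def to_prompt(self) -> str:
        # One line per tag, sorted alphabetically, ready to paste into a prompt.
        lines = []
        for tag in sorted(self._locations):
            coords = "; ".join(
                f"({x:.1f}, {y:.1f}, {z:.1f})" for x, y, z in self._locations[tag]
            )
            lines.append(f"{tag}: {coords}")
        return "\n".join(lines)


tag_map = TextTagMap()
tag_map.add("Sofa", (1.0, 2.0, 0.0))
tag_map.add("kettle", (4.5, 0.2, 1.0))
prompt_text = tag_map.to_prompt()
```

Because the map is plain text, it can be placed directly into a language model's context, which is the property the milestone highlights.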

Most important publications

- Mike Zhang, Kaixian Qu, Vaishakh Patil, Cesar Cadena, Marco Hutter (CoRL 2024): Tag Map: A Text-Based Map for Spatial Reasoning and Navigation with Large Language Models

- Kaixian Qu, Jie Tan, Tingnan Zhang, Fei Xia, Cesar Cadena, Marco Hutter (3rd Workshop on Language and Robot Learning: Language as an Interface): IPPON: Common Sense Guided Informative Path Planning for Object Goal Navigation

Links to images and videos

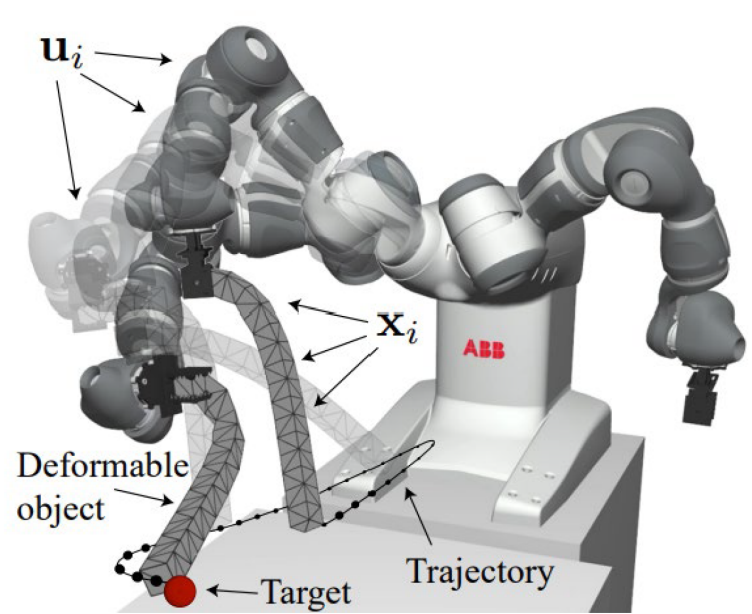

Dexterous manipulation of deformable objects is one of the grand challenges in modern robotics. Deformable objects exhibit complex dynamics, and goal-conditioned contact and motion planning for them promises to transform the manufacturing, garment, and health care industries. This project aims to investigate and formalize the decision-making processes needed for robotic manipulation of deformable objects.

Principal Investigator

Prof. Stelian Coros

Duration

01.07.2023 - 01.01.2025

Most important achieved milestones

1. An optimization-based formulation for task planning that enables changes in the kinematic tree to be treated with gradient-based optimization methods. Published in IROS 2023.

2. An efficient model abstraction for deformable objects that makes long-horizon motion planning problems tractable. The paper considers linear deformable objects as a case study and features robot experiments conducted with our ABB YuMi. Submitted to IROS 2025, in review.

3. A combined contact and motion planning algorithm that produces robust trajectories by leveraging graphics processors. Submission (RA-L) in progress.

Most important publications

- Jimmy Envall, Roi Poranne, Stelian Coros (IROS 2023): Differentiable Task Assignment and Motion Planning

- In review (IROS 2025)

- In preparation (RA-L)

Links to images and videos

- Differentiable Task Assignment and Motion Planning

- Video not yet available

- Video not yet available

Tilt-rotor aerial robots enable precise aerial manipulation by using servo-actuated arms with propellers to achieve generic and redundant thrust vectoring. However, control and modeling mismatches, especially in servomotor dynamics, limit their potential. This project addressed these challenges by applying data-driven modeling techniques for actuator identification and integrating the results into high-fidelity simulations. Key outcomes include a high-fidelity actuator model, the first demonstrated end-to-end reinforcement-learned controller for aerial manipulation, and advancements in sim-to-real transfer. Additionally, the insights and data obtained in this project enabled us to synthesize a complete and novel overarching theory for actuator-model-aware control allocation, which will be published in an upcoming T-RO journal paper. These innovations contributed significantly towards making aerial robots more precise and robust.

Principal Investigator

Prof. Roland Siegwart

Duration

01.07.2023 - 01.10.2024

Most important achieved milestones

1. End-to-End RL for Aerial Robots (WP 3)

In this project, we demonstrated the first successful sim-to-real transfer of a completely end-to-end learned RL controller for overactuated aerial robots that showed robustness against model deviations and chaotic, turbulent airflow interference. In contrast to a classical approach, the RL controller is able to control the robot without re-tuning or adjusting parameters; the equivalent classical controller has about 40 tuning parameters. The RL controller also explored novel ways of using the over-actuation of the system in flight.

2. Actuator Models & Simulation (WP 1&2)

Through extensive research and multiple student projects, we investigated various approaches to identify our actuators (propellers, servos) to a high standard. This not only yielded much better models for control and simulation, but also a much deeper understanding of the dynamics and energy balance occurring inside servo motors loaded with vibrating propellers, and of the brushless propeller controller itself. It also heavily influenced the hardware design: we additionally explored how the hardware can be adjusted to be easier to model.

3. Overarching allocation theory (WP 4)

The developed models and knowledge contributed towards formulating a novel dynamics- and power-aware control allocation theory for aerial robots. With this method, we are able to solve the main allocation problem with dynamics without any artificial secondary optimization objectives. Doing so yields a mathematical formulation that allows us to explicitly derive the null space of the allocation and use it for context-aware secondary targets formulated by the higher-level autonomy stack. An example would be to influence the allocation to use the over-actuation and redundancy to be less efficient but more robust to, e.g., potential airflow deflections detected based on surrounding geometry as seen by the perception module.
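The role of the allocation null space can be illustrated with a deliberately simplified sketch. It is not the project's dynamics- and power-aware formulation: it assumes a static, linear allocation with a single constraint row `a` (for example, four actuators whose commands must sum to a desired total thrust), uses the textbook minimum-norm solution, and projects a secondary preference `z` onto the null space of `a`. All names and numbers are hypothetical.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def allocate(a: Sequence[float], target: float, secondary: Sequence[float]) -> List[float]:
    """Solve a . u = target, then add a null-space term from `secondary`."""
    aa = dot(a, a)
    if aa == 0.0:
        raise ValueError("allocation row must be non-zero")
    # Minimum-norm particular solution: u_p = a * target / (a . a)
    particular = [ai * target / aa for ai in a]
    # Null-space projection of the secondary preference:
    # z_n = z - a * (a . z) / (a . a), which guarantees a . z_n = 0
    scale = dot(a, secondary) / aa
    null_part = [zi - ai * scale for ai, zi in zip(a, secondary)]
    return [p + n for p, n in zip(particular, null_part)]


# Four actuators contribute equally to one controlled quantity (assumed).
row = [1.0, 1.0, 1.0, 1.0]
# The secondary preference shifts effort from actuator 2 to actuator 1.
commands = allocate(row, 8.0, [1.0, -1.0, 0.0, 0.0])
```

The secondary term changes how the effort is distributed across actuators while the primary constraint stays satisfied exactly. In this simplified setting, that is the mechanism by which a higher-level module could trade efficiency for robustness.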

Most important publications

- E. Cuniato, O. Andersson, H. Oleynikova, R. Siegwart, M. Pantic (ISER 2023): Learning to Fly Omnidirectional Micro Aerial Vehicles with an End-To-End Control Network

- E. Cuniato, M. Allenspach, T. Stastny, H. Oleynikova, R. Siegwart, M. Pantic (IEEE Transactions on Robotics, to be submitted): Allocation for Omnidirectional Aerial Robots: From Geometric to Power Dynamics

Links to images and videos

Full size image of the robot flying in disturbance

Video of end-to-end controller

Video of second paper to be published soon

2022 Funded Projects

Project Description

The main objective of this project is to investigate the benefits and challenges of targeted 3D and semantic reconstruction and to develop quality-adaptive semantically guided Simultaneous Localization and Mapping (SLAM) algorithms. The goal is to make an agent (e.g., Boston Dynamics Spot robot) able to navigate and find a target object (or other semantics) in an unknown or partially known environment while reconstructing the scene in a quality-adaptive manner. We interpret being quality-adaptive as making the reconstruction accuracy and detailedness dependent on finding the target class – i.e., reconstruct only until we are certain that the observed object does not belong to the target class.

Principal Investigator

Prof. Marc Pollefeys

Dr. Iro Armeni

Dr. Daniel Barath

Duration

01.09.2022 - 01.03.2024 (18 months)

Most important achieved milestones

1. Quality-Adaptive 3D Semantic Reconstruction. An algorithm for quality-adaptive semantic reconstruction was designed. This algorithm employs a multi-layer voxel structure to represent the environment. Each voxel encapsulates a truncated signed distance function (TSDF) value indicating the distance to the nearest 3D surface, alongside color, texture information, surface normal, and potential semantic classifications. Adaptive voxel subdivision into eight smaller voxels is governed by multiple criteria, including predefined target semantic categories. This approach allows users to delineate objects requiring high-resolution reconstruction from those less critical for the task at hand. The algorithm categorizes resolution into three levels: coarse (8 cm voxel size), middle (4 cm), and fine (1 cm), adjustable based on task requirements. Furthermore, a criterion based on geometric complexity has been established, facilitating the high-quality automatic reconstruction of complex structures irrespective of their semantic classification.

Our current extension on this method entails separating the SLAM reconstruction's geometric complexity from texture details, aiming for high-quality renderings without storing excessively detailed geometry. This is particularly relevant for simple geometries with complex textures, where current methods result in unwarranted reconstruction complexity and substantial storage demands. The proposed solution involves utilizing a coarse, adaptable voxel structure for geometry, with color data in 3D texture boxes, leveraging a triplanar mapping algorithm for enhanced rendering quality with minimal geometric detail.

2. An algorithm was introduced to enhance Voxblox++ significantly, enabling high-quality, real-time, incremental 3D panoptic segmentation of the environment. This method combines 2D-to-3D semantic and instance mapping to surpass the accuracy of recent 2D-to-3D semantic instance segmentation techniques on large-scale public datasets. Improvements over Voxblox++ include:

- a novel application of 2D semantic prediction confidence in the mapping process,

- a new method for segmenting semantic-instance consistent surface regions (super-points) and

- a new graph optimization-based approach for semantic labeling and instance refinement.

3. Another significant contribution of the project is a novel matching algorithm that incorporates semantics for enhanced feature identification within a SLAM pipeline. This method generates a semantic descriptor from each feature's vicinity, which is integrated with the conventional visual descriptor for feature matching. Demonstrated improvements in accuracy, verified using publicly available datasets, underscore the method's effectiveness while maintaining real-time performance capabilities.
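The three resolution levels described in milestone 1 can be sketched as a simple selection rule. This is an illustrative assumption, not the published algorithm: the user maps semantic labels to a level, unlisted labels fall back to coarse, and a hypothetical geometric complexity score above a hypothetical threshold forces the fine level regardless of label. Only the voxel sizes (8 cm, 4 cm, 1 cm) come from the description above.

```python
from typing import Dict, Optional

# Voxel edge lengths in meters for the three levels named in the text.
VOXEL_SIZE_M: Dict[str, float] = {"coarse": 0.08, "middle": 0.04, "fine": 0.01}


def select_voxel_size(
    label: Optional[str],
    level_by_label: Dict[str, str],
    complexity: float,
    complexity_threshold: float = 0.5,  # hypothetical value
) -> float:
    """Pick a voxel size from the semantic label and geometric complexity."""
    # Complex geometry is reconstructed finely irrespective of its class.
    if complexity >= complexity_threshold:
        return VOXEL_SIZE_M["fine"]
    # Otherwise use the task-specific level, defaulting to coarse.
    level = "coarse" if label is None else level_by_label.get(label, "coarse")
    return VOXEL_SIZE_M[level]


# Example task: chairs are the target class, tables are of secondary interest.
task_levels = {"chair": "fine", "table": "middle"}
chair_size = select_voxel_size("chair", task_levels, complexity=0.1)
wall_size = select_voxel_size("wall", task_levels, complexity=0.1)
```

Going from 8 cm to 4 cm corresponds to one subdivision into eight child voxels, and going from 4 cm to 1 cm corresponds to two further subdivisions.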

Most important publications

- Oguzhan Ilter, Iro Armeni, Marc Pollefeys, Daniel Barath (ICRA 2024): Semantically Guided Feature Matching for Visual SLAM

- Yang Miao, Iro Armeni, Marc Pollefeys, Daniel Barath (IROS 2024): Volumetric Semantically Consistent 3D Panoptic Mapping

- Jianhao Zheng, Daniel Barath, Marc Pollefeys, Iro Armeni (ECCV 2024): MAP-ADAPT: Real-Time Quality-Adaptive Semantic 3D Maps

Links to images and videos

Project Description

Within the project, we investigate representations of soft and/or articulated robots and objects to allow general manipulation pipelines. To apply these representations for real manipulation tasks, we develop a dexterous robotic platform.

Principal Investigator

Prof. Robert Katzschmann

Prof. Fisher Yu

Duration

01.07.2022 - 01.01.2024 (18 months)

Most important achieved milestones

1. We present ICGNet, which uses point cloud data to create an embedding that contains both surface and volumetric information and can be used to predict occupancy, object classes, and physics- or application-specific details such as grasp poses.

2. We developed a real-time tracking framework for soft and articulated robots that allows for real-time mesh construction from point cloud data with point-wise errors that are almost an order of magnitude lower than the state of the art.

3. As an application platform, we constructed a dexterous robotic hand that is capable of precise and fast manipulation of objects.

Most important publications

- Yasunori Toshimitsu, Benedek Forrai, Barnabas Gavin Cangan, Ulrich Steger, Manuel Knecht, Stefan Weirich, Robert K. Katzschmann (Humanoids 2023): Getting the Ball Rolling: Learning a Dexterous Policy for a Biomimetic Tendon-Driven Hand with Rolling Contact Joints

- René Zurbrügg, Yifan Liu, Francis Engelmann, Suryansh Kumar, Marco Hutter, Vaishakh Patil, Fisher Yu (ICRA 2024): ICGNet: A Unified Approach for Instance-Centric Grasping

- Elham Amin Mansour, Hehui Zheng, Robert K. Katzschmann (ROBOVIS 2024): Fast Point Cloud to Mesh Reconstruction for Deformable Object Tracking

Links to images and videos

ICGNet Architecture (CC BY-NC-ND 4.0)

Grasp prediction pipeline (CC BY-NC-ND 4.0)

Predicted grasps with ICGNet (CC BY-NC-ND 4.0)

Dexterous robotic hand demo video (Apache-2.0, BSD-3-Clause)

Robotic hands learning in simulation (Apache-2.0, BSD-3-Clause)

Robotic hands + rendering point clouds for ICGNet in simulation (Apache-2.0, BSD-3-Clause)